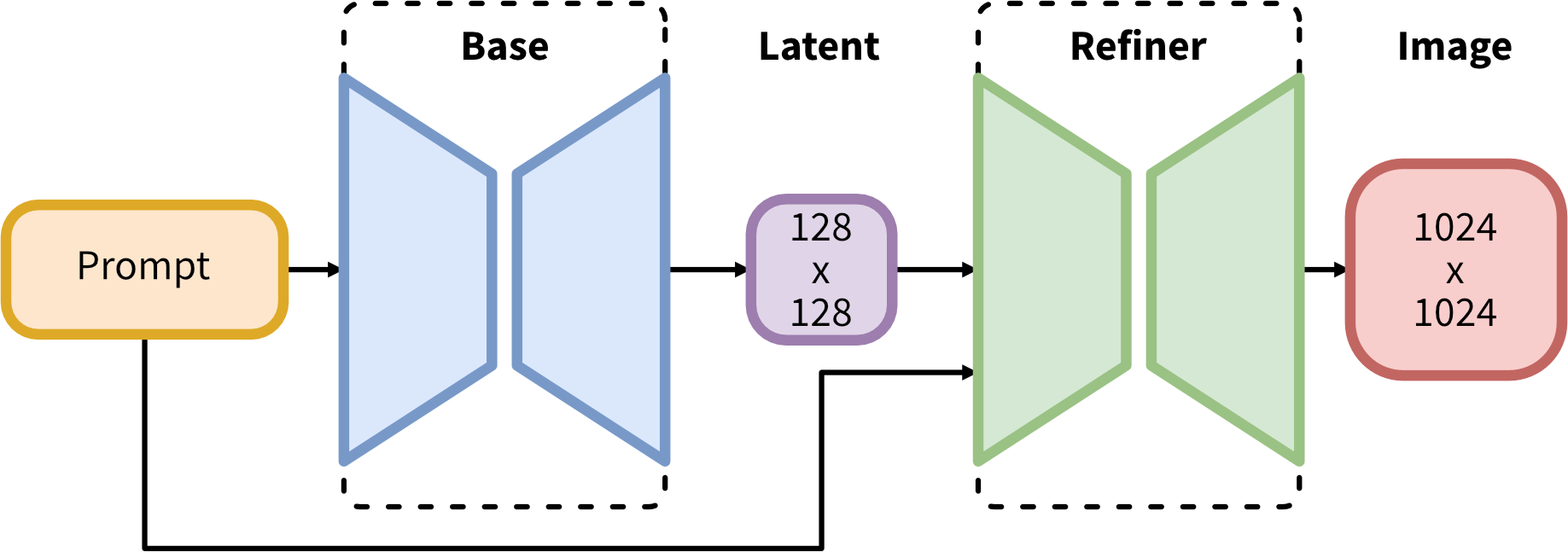

import numpy as np
import gradio as gr
from diffusers import DiffusionPipeline, StableDiffusionXLImg2ImgPipeline
import torch
import tqdm
from datetime import datetime
from TorchDeepDanbooru import deep_danbooru_model

MODEL_BASE = "stabilityai/stable-diffusion-xl-base-1.0"
MODEL_REFINER = "stabilityai/stable-diffusion-xl-refiner-1.0"

# Load the base pipeline in half precision and move it to the GPU.
print("Loading model", MODEL_BASE)
base = DiffusionPipeline.from_pretrained(
    MODEL_BASE, torch_dtype=torch.float16, use_safetensors=True, variant="fp16"
)
base.to("cuda")

# The refiner reuses the base model's second text encoder and VAE to save memory.
print("Loading model", MODEL_REFINER)
refiner = StableDiffusionXLImg2ImgPipeline.from_pretrained(
    MODEL_REFINER,
    text_encoder_2=base.text_encoder_2,
    vae=base.vae,
    torch_dtype=torch.float16,
    use_safetensors=True,
    variant="fp16",
)
refiner.to("cuda")

# Default values for the UI controls.
default_n_steps = 40
default_high_noise_frac = 0.8
default_num_images = 2

def predit_txt2img(prompt, negative_prompt, model_selected, num_images, n_steps, high_noise_frac, cfg_scale):
    start = datetime.now()
    # Gradio sliders may deliver floats, so coerce the numeric inputs.
    num_images = int(num_images)
    n_steps = int(n_steps)
    high_noise_frac = float(high_noise_frac)
    cfg_scale = float(cfg_scale)
    # One prompt (and negative prompt) per requested image.
    prompt, negative_prompt = [prompt] * num_images, [negative_prompt] * num_images
    images_list = []
    g = torch.Generator(device="cuda")
    use_refiner = model_selected == "sd-xl-base-refiner-1.0"
    if model_selected == "sd-xl-base-1.0" or use_refiner:
        images = base(
            prompt=prompt,
            negative_prompt=negative_prompt,
            num_inference_steps=n_steps,
            # Stop early only when the refiner will finish the denoising;
            # in base-only mode the base model runs all steps.
            denoising_end=high_noise_frac if use_refiner else None,
            guidance_scale=cfg_scale,
            # Hand latents to the refiner; otherwise decode straight to PIL images.
            output_type="latent" if use_refiner else "pil",
            generator=g,
        ).images
        if use_refiner:
            # The refiner resumes at the point where the base model stopped.
            images = refiner(
                prompt=prompt,
                negative_prompt=negative_prompt,
                num_inference_steps=n_steps,
                denoising_start=high_noise_frac,
                guidance_scale=cfg_scale,
                image=images,
            ).images
        for image in images:
            images_list.append(image)
    torch.cuda.empty_cache()
    cost_time = (datetime.now() - start).seconds
    print(f"cost time={cost_time},{datetime.now()}")
    return images_list

def predit_img2img(prompt, negative_prompt, init_image, model_selected, n_steps, high_noise_frac, cfg_scale, strength):
    start = datetime.now()
    # Gradio sliders may deliver floats, so coerce the numeric inputs.
    n_steps = int(n_steps)
    high_noise_frac = float(high_noise_frac)
    cfg_scale = float(cfg_scale)
    strength = float(strength)
    if model_selected == "sd-xl-refiner-1.0":
        # Note: per the diffusers documentation, `strength` is ignored
        # whenever `denoising_start` is set.
        images = refiner(
            prompt=prompt,
            negative_prompt=negative_prompt,
            num_inference_steps=n_steps,
            denoising_start=high_noise_frac,
            guidance_scale=cfg_scale,
            strength=strength,
            image=init_image,
        ).images
    torch.cuda.empty_cache()
    cost_time = (datetime.now() - start).seconds
    print(f"cost time={cost_time},{datetime.now()}")
    return images[0]

def interrogate_deepbooru(pil_image, threshold=0.5):
    # `threshold` defaults to 0.5 because the UI passes only the image.
    model = deep_danbooru_model.DeepDanbooruModel()
    model.load_state_dict(torch.load('/home/wpsze/ML/huggingface_hub/stable-diffusion-xl-base-1.0/TorchDeepDanbooru/model-resnet_custom_v3.pt'))
    model.eval().half().cuda()
    # The model expects a 512x512 RGB image scaled to [0, 1], with a batch dimension.
    pic = pil_image.convert("RGB").resize((512, 512))
    a = np.expand_dims(np.array(pic, dtype=np.float32), 0) / 255
    with torch.no_grad(), torch.autocast("cuda"):
        x = torch.from_numpy(a).cuda()
        y = model(x)[0].detach().cpu().numpy()
        # Ten extra forward passes (apparently a timing loop); they do not change `y`.
        for n in tqdm.tqdm(range(10)):
            model(x)
    # Keep every tag whose score reaches the threshold.
    result_tags_out = []
    for i, p in enumerate(y):
        if p >= threshold:
            result_tags_out.append(model.tags[i])
            print(model.tags[i], p)
    # Turn the tag list into a comma-separated prompt.
    prompt = ', '.join(result_tags_out).replace('_', ' ').replace(':', ' ')
    print(f"prompt={prompt}")
    return prompt

def clear_txt2img(prompt, negative_prompt):
    # Reset both prompt boxes.
    return "", ""

def clear_img2img(prompt, negative_prompt, image_input, image_output=None):
    # Reset both prompt boxes and both image panels.
    return "", "", None, None

with gr.Blocks(title="Stable Diffusion", theme=gr.themes.Default(primary_hue=gr.themes.colors.blue)) as demo:
    with gr.Tab("Text-to-Image"):
        model_selected = gr.Radio(["sd-xl-base-refiner-1.0", "sd-xl-base-1.0"], show_label=False, value="sd-xl-base-refiner-1.0")
        with gr.Row():
            with gr.Column(scale=4):
                prompt = gr.Textbox(label="Prompt", lines=3)
                negative_prompt = gr.Textbox(label="Negative Prompt", lines=1)
                with gr.Row():
                    with gr.Column():
                        n_steps = gr.Slider(20, 60, value=default_n_steps, label="Steps", info="Choose between 20 and 60")
                        high_noise_frac = gr.Slider(0, 1, value=0.8, label="Denoising Start at")
                    with gr.Column():
                        num_images = gr.Slider(1, 3, value=default_num_images, label="Generated Images", info="Choose between 1 and 3")
                        cfg_scale = gr.Slider(1, 20, value=7.5, label="CFG Scale")
            with gr.Column(scale=1):
                with gr.Row():
                    txt2img_button = gr.Button("Generate", size="sm")
                    clear_button = gr.Button("Clear", size="sm")
        gallery = gr.Gallery(label="Generated images", show_label=False, elem_id="gallery", columns=int(num_images.value), height=800, object_fit='fill')
        txt2img_button.click(predit_txt2img, inputs=[prompt, negative_prompt, model_selected, num_images, n_steps, high_noise_frac, cfg_scale], outputs=[gallery])
        clear_button.click(clear_txt2img, inputs=[prompt, negative_prompt], outputs=[prompt, negative_prompt])
    with gr.Tab("Image-to-Image"):
        model_selected = gr.Radio(["sd-xl-refiner-1.0"], value="sd-xl-refiner-1.0", show_label=False)
        with gr.Row():
            with gr.Column(scale=1):
                prompt = gr.Textbox(label="Prompt", lines=2)
            with gr.Column(scale=1):
                negative_prompt = gr.Textbox(label="Negative Prompt", lines=2)
        with gr.Row():
            with gr.Column(scale=3):
                image_input = gr.Image(type="pil", height=512)
            with gr.Column(scale=3):
                image_output = gr.Image(height=512)
            with gr.Column(scale=1):
                img2img_deepbooru = gr.Button("Interrogate DeepBooru", size="sm")
                img2img_button = gr.Button("Generate", size="lg")
                clear_button = gr.Button("Clear", size="sm")
        n_steps = gr.Slider(20, 60, value=40, step=10, label="Steps")
        high_noise_frac = gr.Slider(0, 1, value=0.8, step=0.1, label="Denoising Start at")
        cfg_scale = gr.Slider(1, 20, value=7.5, step=0.1, label="CFG Scale")
        strength = gr.Slider(0, 1, value=0.3, step=0.1, label="Denoising strength")
        img2img_deepbooru.click(fn=interrogate_deepbooru, inputs=image_input, outputs=[prompt])
        img2img_button.click(predit_img2img, inputs=[prompt, negative_prompt, image_input, model_selected, n_steps, high_noise_frac, cfg_scale, strength], outputs=image_output)
        # Pass all four components so the call matches clear_img2img's parameters.
        clear_button.click(clear_img2img, inputs=[prompt, negative_prompt, image_input, image_output], outputs=[prompt, negative_prompt, image_input, image_output])

if __name__ == "__main__":
    demo.launch(server_name="0.0.0.0", server_port=8000)
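
The "Denoising Start at" slider (`high_noise_frac`) decides how the step budget is shared between the base model and the refiner: the base pipeline stops at `denoising_end=high_noise_frac` and the refiner resumes at `denoising_start=high_noise_frac`. The sketch below only illustrates that arithmetic. `split_steps` is a hypothetical helper that is not part of the app or of diffusers, and the exact step counts diffusers ends up running depend on the scheduler, so treat the numbers as approximate.

```python
def split_steps(n_steps: int, high_noise_frac: float) -> tuple[int, int]:
    """Approximate (base_steps, refiner_steps) for a given split fraction.

    Hypothetical helper for illustration; diffusers derives the real
    split from the scheduler's timesteps, so counts may differ slightly.
    """
    if not 0.0 <= high_noise_frac <= 1.0:
        raise ValueError("high_noise_frac must be in [0, 1]")
    base_steps = round(n_steps * high_noise_frac)
    return base_steps, n_steps - base_steps


if __name__ == "__main__":
    # With the app's defaults (40 steps, 0.8) the base model gets about
    # 32 steps and the refiner about 8.
    for frac in (0.5, 0.8, 1.0):
        print(frac, split_steps(40, frac))
```

A lower fraction hands more of the schedule to the refiner; a fraction of 1.0 leaves it nothing to do.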
